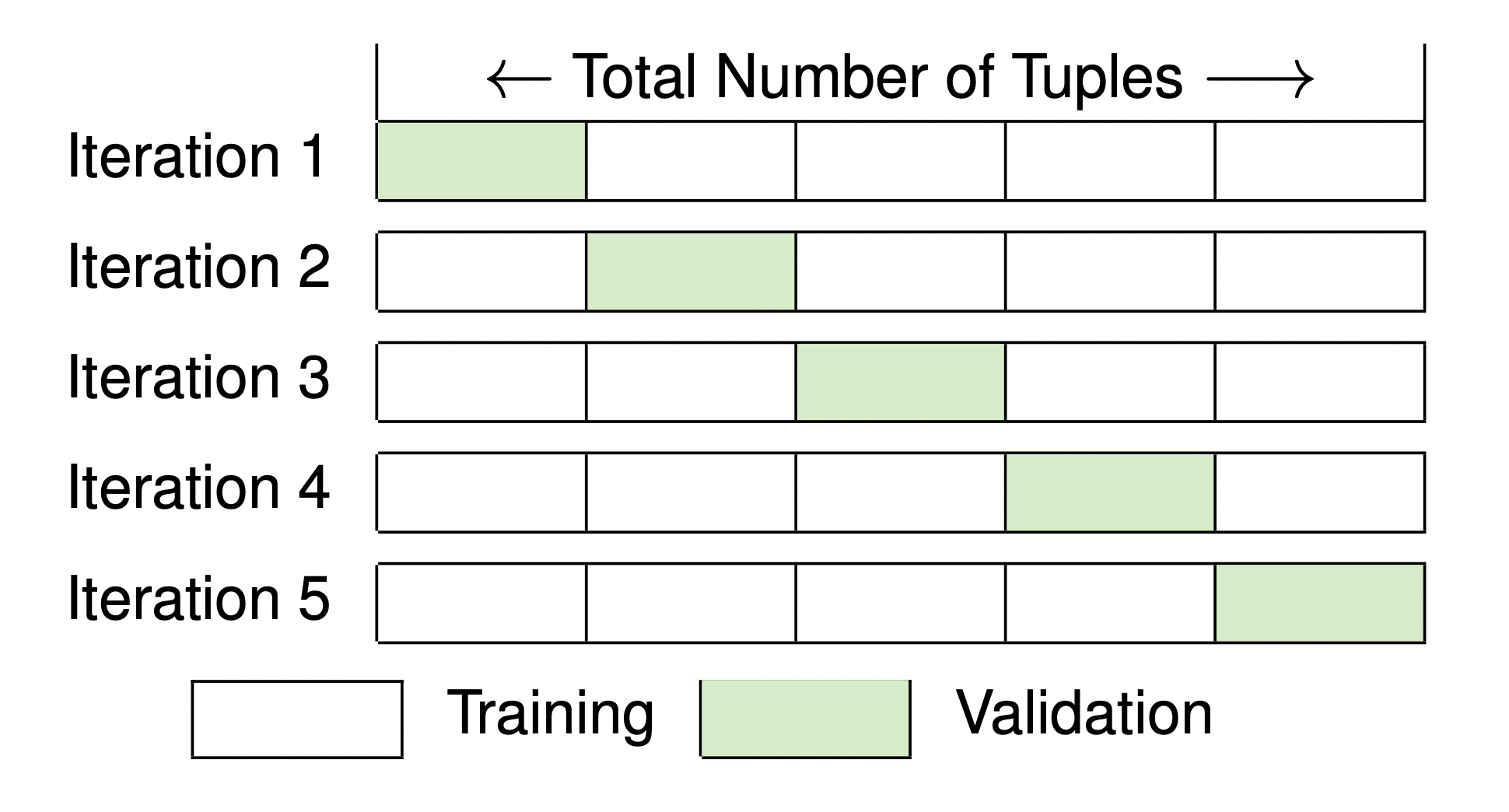

K-Fold Cross Validation

- shuffle data

- split data in folds (with each having approximately the same size)

- for each foldc(so times)

- use the fold as validation set

- use the rest to train the model

- train the model

- evaluate the model on the validation set → Performance Evaluation Metrics

- Take the average of all folds

This procedure ensures that your model has been validated on every sample of your Dataset and not only on a simple 30-70 split.

A good value for is 10 (empirical analysis).

For very small data sets use Leave-One-Out K-Fold Cross Validation.

Example