Partially Observable MDP

A Markov Decision Process together with a Sensor Model that has the Sensor Markov Property and is stationary, which means This models a partially observable and stochastic environment. As the Agent does not know which state it is in, it makes no sense to talk about policies that map concrete states to Actions. Thus we introduce the Optimal Policy as a function that maps belief states to actions instead.

Now the idea is to convert a POMDP into an MDP in Belief State space. Which means we want to have a Transition Model on the belief states and a reward function on the belief states.

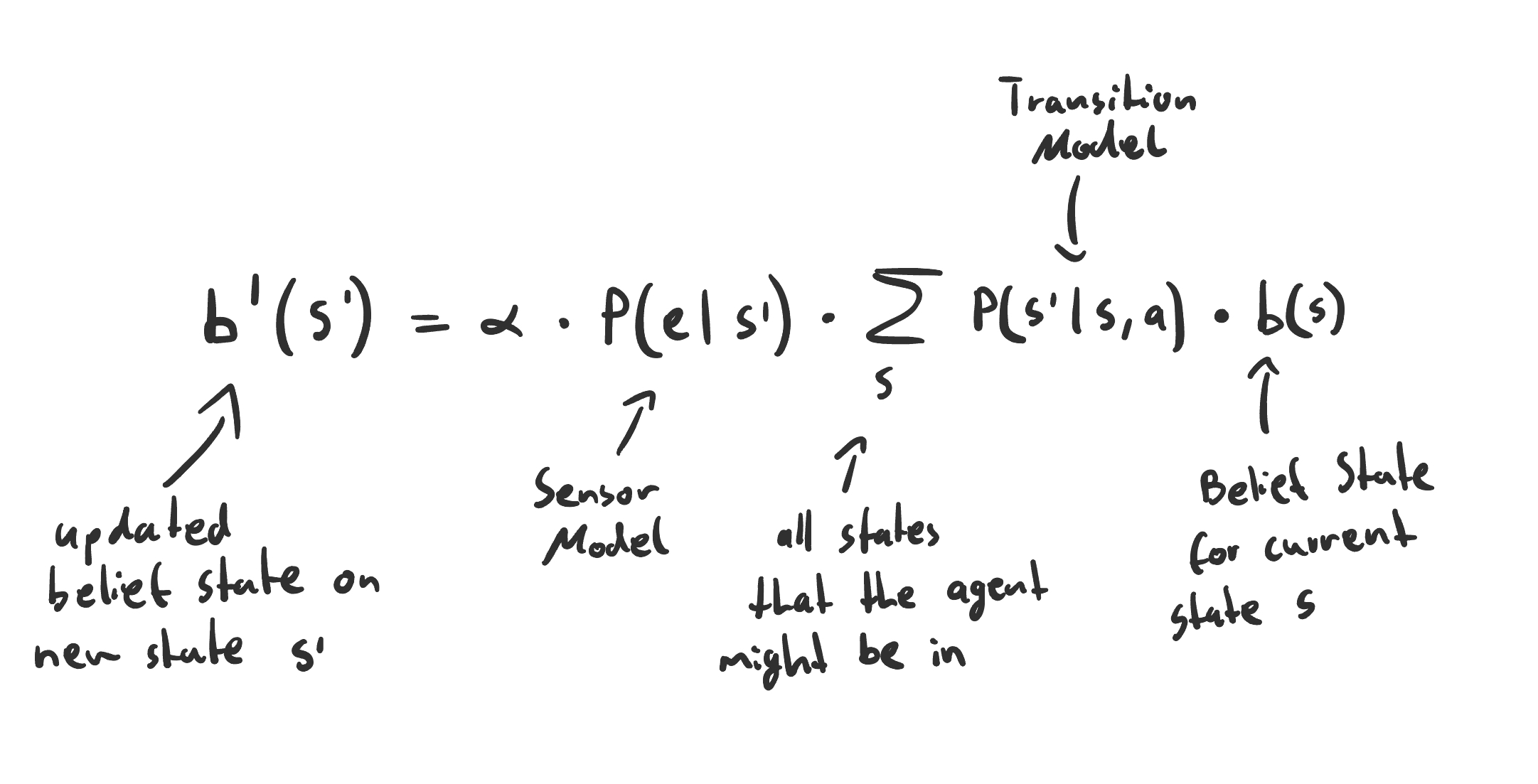

Filtering at the Belief State Level

Overview Formula

POMDP Decision Cycle

- Given current Belief State , execute Action

- Receive Percept

- Update Belief State with

Reducing POMDPs to Belief State MDPs

Transition Model at the Belief State level

P\left(b^{\prime} \mid b, a\right) & =P\left(b^{\prime} \mid a, b\right)=\sum_e P\left(b^{\prime} \mid e, a, b\right) \cdot P(e \mid a, b) \\ & =\sum_e P\left(b^{\prime} \mid e, a, b\right) \cdot\left(\sum_{s^{\prime}} P\left(e \mid s^{\prime}\right) \cdot\left(\sum_s P\left(s^{\prime} \mid s, a\right), b(s)\right)\right) \end{aligned}$$ where $P\left(b^{\prime} \mid e, a, b\right)$ is $1$ if $b^{\prime}=\operatorname{FORWARD}(b, a, e)$ and 0 otherwise. Reward function at the [[Belief State]] level. $$\rho(b):=\sum_s b(s) \cdot R(s)$$ The expected reward for the states the [[Rational Agent|Agent]] might be in. Now we reduced the POMDP to a [[Markov Decision Process]] with a [[Übergangsmatrix|Transition Model]] $$P\left(b^{\prime} \mid b, a\right)$$ and a reward function $$\rho(b).$$ This is actually observable now as the [[Belief State]] state is **always** observable. An [[Optimal Policy]] on this MDP is also an optimal policy on the POMDP.