Global Surrogate

Idea

Train a simple, intrinsically interpretable model to approximate the output of a more complex Black Box Model. Use this simple model to explain the Black Box Model's decisions (not the real world).

- Select, create or sample an input dataset and generate predictions with the Black Box Model

- Train the surrogate model on this data to match the predictions

- Interpret the surrogate model

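- Judge the relevance of the interpretation by the R-Squared of the surrogate model, measured against the Black Box Model's predictions. A low R-Squared means the surrogate approximates the Black Box Model poorly, which might lead to wrong explanations.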
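
As a reminder of what R-Squared measures in this setting, one common way to write it is shown below. The symbols are notation assumed here, not taken from the notes: $\hat{y}_i$ is the Black Box Model's prediction for sample $i$, $\hat{y}^{*}_i$ is the surrogate's prediction for the same sample, and $\bar{\hat{y}}$ is the mean of the Black Box Model's predictions over all $n$ samples.

$$
R^2 = 1 - \frac{\sum_{i=1}^{n} \left(\hat{y}^{*}_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n} \left(\hat{y}_i - \bar{\hat{y}}\right)^2}
$$

A value close to 1 means the surrogate reproduces the Black Box Model's predictions well; a value close to 0 means it does little better than always predicting their mean. Note that the reference is the Black Box Model's output, not the true labels.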

The sample selection plays a crucial role. Selecting samples from only one region of the feature space yields a local rather than a global surrogate, which is what LIME does on purpose.

Example

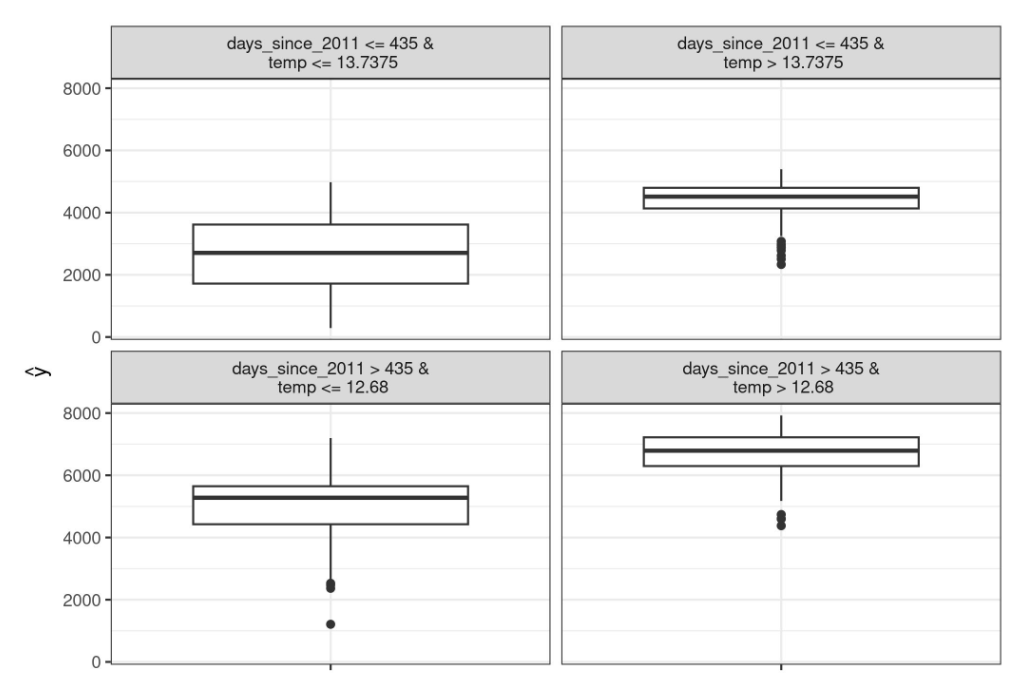

Train a Decision Tree on the predictions of a Support Vector Machine.
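
The example can be sketched roughly as follows with scikit-learn. This is a minimal illustration under assumptions, not the setup behind the figure: the data is synthetic, the regression variants (`SVR`, `DecisionTreeRegressor`) are used so that R-Squared applies directly, and the hyperparameters (`C=100.0`, `max_depth=3`) are arbitrary choices.

```python
from sklearn.datasets import make_regression
from sklearn.metrics import r2_score
from sklearn.svm import SVR
from sklearn.tree import DecisionTreeRegressor, export_text

# Synthetic data stands in for the real input dataset (assumption).
X, y = make_regression(n_samples=500, n_features=4, noise=10.0, random_state=0)

# 1. Black box: an RBF-kernel support vector regressor trained on the true targets.
black_box = SVR(kernel="rbf", C=100.0).fit(X, y)
y_black_box = black_box.predict(X)

# 2. Surrogate: a shallow tree trained on the black-box predictions, not on y.
surrogate = DecisionTreeRegressor(max_depth=3, random_state=0).fit(X, y_black_box)
y_surrogate = surrogate.predict(X)

# 3. Fidelity: R-squared of the surrogate measured against the black box.
fidelity = r2_score(y_black_box, y_surrogate)
print(f"R-squared (surrogate vs. black box): {fidelity:.3f}")

# 4. Interpret the surrogate, here by printing its decision rules.
feature_names = [f"x{i}" for i in range(X.shape[1])]
print(export_text(surrogate, feature_names=feature_names))
```

Limiting the tree depth keeps the surrogate readable at the cost of fidelity; a deeper tree would usually raise the R-Squared but be harder to interpret.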

Pros

- flexible (Model-Agnostic, Post-Hoc)

- intuitive

- R-Squared measures how well the surrogate approximates the Black Box Model, and thus how far the interpretations can be trusted

Cons

- conclusions only about the model, not the data

- where should the R-Squared cut-off be?

- how to choose a good subset of data?