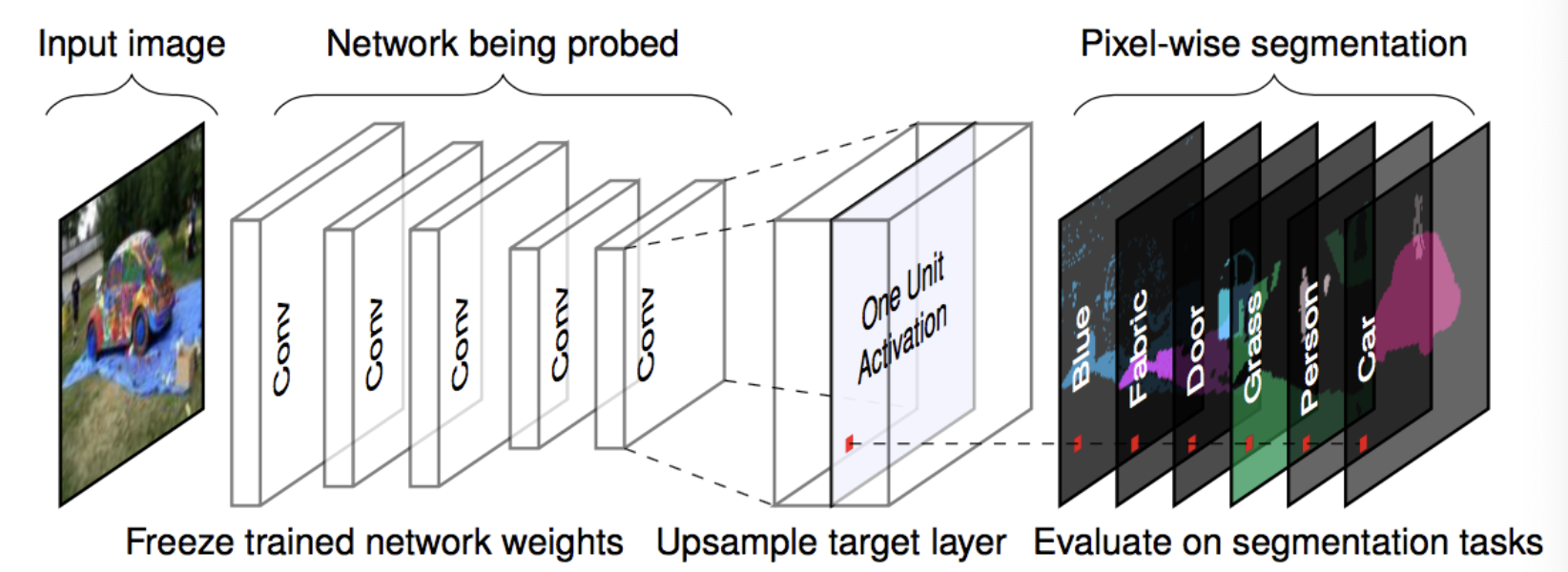

Network Dissection

Network Dissection tries to answer whether a neural network has actually learned the concepts that can be visualized with Activation Maximization. To do so, it quantifies the interpretability of individual units (e.g. a single channel of a CNN layer).

- Collect images in which humans have labeled visual concepts (e.g. as segmentation masks)

- Measure the activations of each CNN channel on these images

- Quantify how well each channel's activations align with the labeled concepts
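The three steps can be sketched for a single unit in NumPy. This is a minimal sketch under stated assumptions: the activation maps are already upsampled to the resolution of the concept masks, the masks are boolean arrays, and the binarization threshold (top 0.5% of activations) and detector cutoff (IoU > 0.04) follow the values commonly reported for Network Dissection. The function names `dissect_unit` and `label_unit` are hypothetical.

```python
import numpy as np


def dissect_unit(activations, concept_masks, top_fraction=0.005):
    """Score how well one unit (CNN channel) aligns with each labeled concept.

    activations:   float array (N, H, W), the unit's activation maps for N images,
                   assumed to be upsampled to the resolution of the concept masks.
    concept_masks: dict mapping concept name -> bool array (N, H, W) of human labels.
    top_fraction:  fraction of activation values kept when binarizing (assumed 0.005).
    """
    # Threshold chosen over the whole dataset so that roughly the top
    # `top_fraction` of activation values lie at or above it.
    threshold = np.quantile(activations, 1.0 - top_fraction)
    unit_mask = activations >= threshold  # binarized activation map

    scores = {}
    for concept, mask in concept_masks.items():
        # IoU is accumulated over all images at once, not averaged per image.
        intersection = np.logical_and(unit_mask, mask).sum()
        union = np.logical_or(unit_mask, mask).sum()
        scores[concept] = float(intersection / union) if union > 0 else 0.0
    return scores


def label_unit(scores, iou_threshold=0.04):
    """Return the best-aligned concept, or None if no IoU exceeds the threshold."""
    concept, iou = max(scores.items(), key=lambda item: item[1])
    return concept if iou > iou_threshold else None


# Toy example: one 4x4 "image" where the unit fires in the top-left corner.
acts = np.zeros((1, 4, 4))
acts[0, :2, :2] = 1.0
masks = {
    "sky": acts > 0,                          # exactly the firing region
    "grass": np.zeros((1, 4, 4), dtype=bool),
}
masks["grass"][0, 3, :] = True                # bottom row only

scores = dissect_unit(acts, masks)
print(scores)              # {'sky': 1.0, 'grass': 0.0}
print(label_unit(scores))  # sky
```

Thresholding over the whole dataset, rather than per image, keeps the binarized maps comparable across images, so a unit that fires weakly everywhere does not produce large masks.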

Alignment

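The alignment between a unit and a concept is measured with the Intersection over Union (IoU) of the unit's binarized activation map and the concept's labeled region.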
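Written out as a sketch of the usual formulation (the symbols are introduced here for illustration): for unit $k$, let $S_k(x)$ be its activation map on image $x$ upsampled to input resolution, $a_k$ its activation values over the dataset, $T_k$ a threshold chosen so that only the top 0.5% of those values exceed it, and $L_c(x)$ the human-labeled mask of concept $c$.

$$
M_k(x) = \mathbb{1}\left[S_k(x) \ge T_k\right], \qquad P(a_k > T_k) = 0.005
$$

$$
\mathrm{IoU}_{k,c} = \frac{\sum_x \left|M_k(x) \cap L_c(x)\right|}{\sum_x \left|M_k(x) \cup L_c(x)\right|}
$$

The sums run over all images in the dataset. A unit is then typically reported as a detector for the concept with the highest $\mathrm{IoU}_{k,c}$, provided that value exceeds a small cutoff (0.04 is the value usually cited).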