Friedman’s H-statistic

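If features $j$ and $k$ do not interact, the two-way PDP decomposes into $PD_{jk}(x_j, x_k) = PD_j(x_j) + PD_k(x_k)$, and if feature $j$ interacts with no other feature, the prediction function decomposes into $\hat{f}(x) = PD_j(x_j) + PD_{-j}(x_{-j})$. Here $-j$ means all features that are not $j$.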

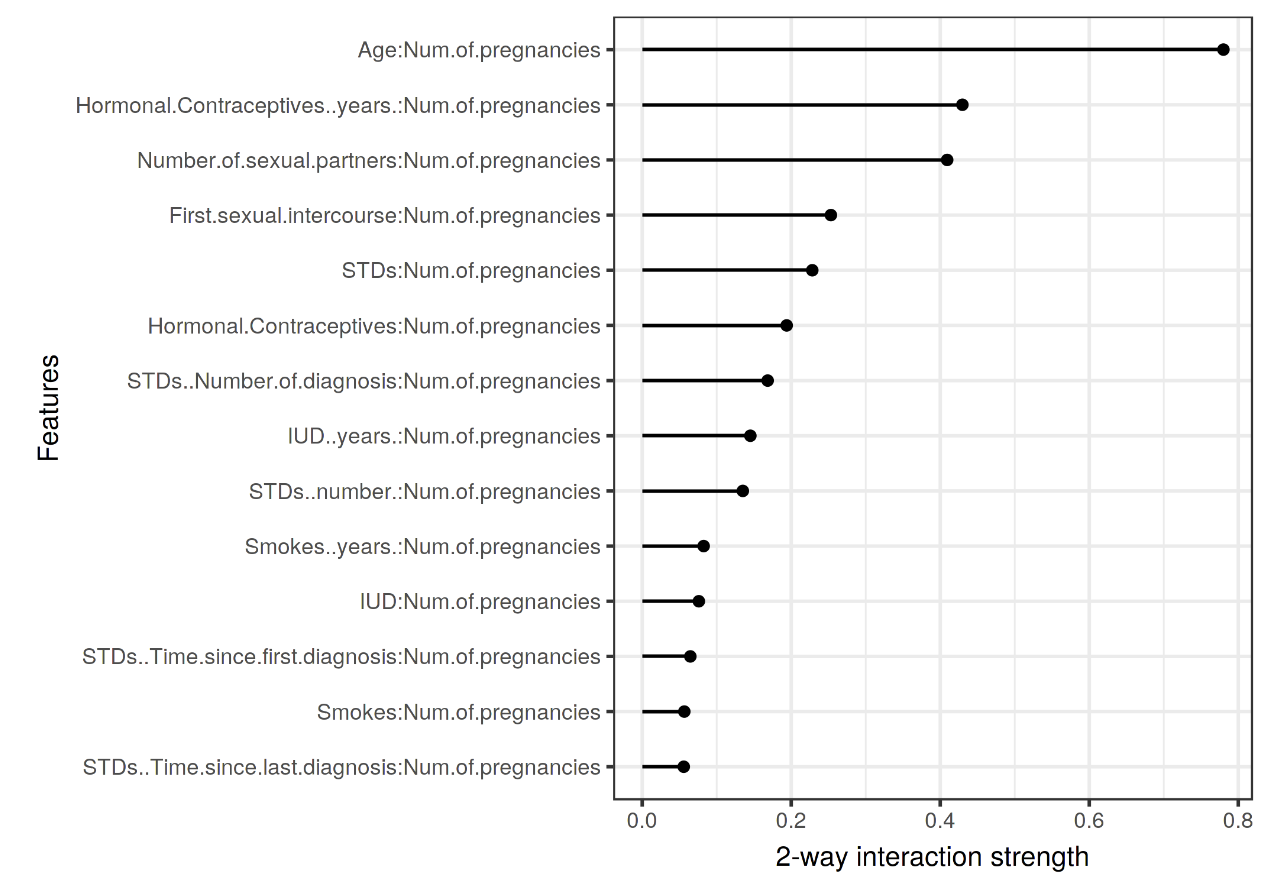

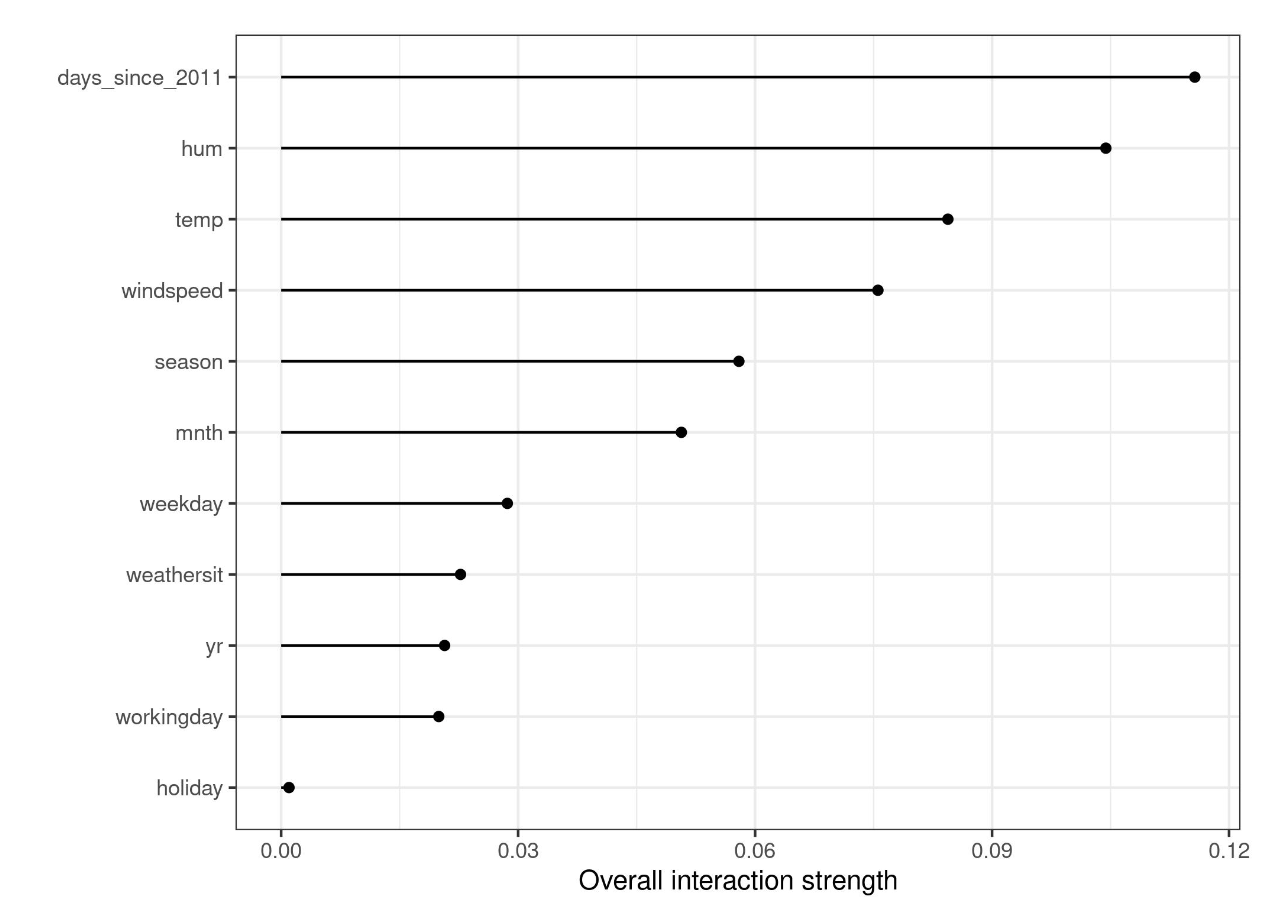

The two statistics are $H^2_{jk}$ (interaction of feature $j$ with feature $k$) and $H^2_j$ (interaction of feature $j$ with all other features).
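Written out in the usual notation, and assuming mean-centered partial dependence functions $PD$ evaluated at the data points $x^{(i)}$, $i = 1, \dots, n$, the two statistics measure the share of variance that the no-interaction decomposition fails to explain:

$$
H^2_{jk} = \frac{\sum_{i=1}^{n} \left[ PD_{jk}\big(x_j^{(i)}, x_k^{(i)}\big) - PD_j\big(x_j^{(i)}\big) - PD_k\big(x_k^{(i)}\big) \right]^2}{\sum_{i=1}^{n} PD_{jk}^2\big(x_j^{(i)}, x_k^{(i)}\big)}
$$

$$
H^2_{j} = \frac{\sum_{i=1}^{n} \left[ \hat{f}\big(x^{(i)}\big) - PD_j\big(x_j^{(i)}\big) - PD_{-j}\big(x_{-j}^{(i)}\big) \right]^2}{\sum_{i=1}^{n} \hat{f}^2\big(x^{(i)}\big)}
$$

Both numerators vanish exactly when the corresponding decomposition holds, so a value of 0 indicates no interaction.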

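The statistic is expensive to evaluate: every partial dependence value is an average over all $n$ data points, so evaluating the partial dependence functions at all $n$ points takes on the order of $n^2$ calls to the model for either statistic.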
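The quadratic cost is easy to see in a brute-force estimate of the pairwise statistic. The following is a minimal sketch, not a reference implementation: the helper name `h2_pairwise`, the toy models and the row-counting convention (one "call" per predicted row) are assumptions made for illustration, and the partial dependence functions are estimated naively on the full data set.

```python
import numpy as np

def h2_pairwise(predict, X, j, k):
    """Brute-force estimate of Friedman's H^2 for features j and k.

    predict: function mapping an (n, p) array to n predictions.
    Returns (h2, calls), where calls counts predicted rows.
    """
    n = X.shape[0]
    calls = 0

    def centered_pd(cols):
        # Partial dependence evaluated at each data point's own values
        # of `cols`, averaging predictions over all rows for the rest.
        nonlocal calls
        out = np.empty(n)
        for i in range(n):
            X_mod = X.copy()
            X_mod[:, cols] = X[i, cols]   # fix the chosen features
            out[i] = predict(X_mod).mean()
            calls += n                    # n predicted rows per point
        return out - out.mean()           # mean-center

    pd_j = centered_pd([j])
    pd_k = centered_pd([k])
    pd_jk = centered_pd([j, k])

    numerator = np.sum((pd_jk - pd_j - pd_k) ** 2)
    denominator = np.sum(pd_jk ** 2)
    return numerator / denominator, calls

# Toy data and two toy "models" (hypothetical, for illustration only).
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))

additive = lambda A: A[:, 0] + 2.0 * A[:, 1]   # no interaction
product = lambda A: A[:, 0] * A[:, 1]          # pure interaction

h2_add, calls_add = h2_pairwise(additive, X, 0, 1)
h2_prod, calls_prod = h2_pairwise(product, X, 0, 1)
print(h2_add, h2_prod, calls_add)
```

For the additive model the estimate is zero up to floating-point error, while the product model yields a value close to one. The call counter grows with $n^2$, which is why in practice the statistic is often computed on a subsample, at the price of higher variance in the estimate.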

Examples

Pros

- underlying theory

- meaningful interpretation: share of variance explained by the interaction

- dimensionless (comparable across features and models)

- detects all kinds of interactions, regardless of their form

- can be extended to arbitrarily higher-order interactions (e.g. between three or more features)

Cons

- computationally expensive

- estimates have variance when computed on a sample rather than on all data points

- no model-agnostic test or threshold for when an interaction is strong enough

- same problem as the PDP: correlated features can lead to misleadingly high values