Logistic Regression

Logistic Regression is a Classification ML model. It applies Linear Regression to classification: the output of the linear model, the so-called Log Odds, is fed into the Sigmoid Function to get class probabilities.
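A minimal NumPy sketch of that pipeline, using hypothetical weights `w` and intercept `b` picked only for illustration. The linear part gives the Log Odds, the Sigmoid Function turns them into a probability, and the logit (log of the odds) maps the probability back.

```python
import numpy as np

def sigmoid(z):
    """Map log odds z (any real number) to a probability in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def log_odds(p):
    """Inverse of the sigmoid (the logit): log(p / (1 - p))."""
    return np.log(p / (1.0 - p))

# Hypothetical weights, intercept and input, chosen for illustration only.
w = np.array([0.8, -1.5])
b = 0.3
x = np.array([2.0, 1.0])

z = w @ x + b      # linear part = log odds: 0.8*2 - 1.5*1 + 0.3 = 0.4
p = sigmoid(z)     # class probability P(y = 1 | x), about 0.599
print(z, p, log_odds(p))
```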

Put differently, it regresses the parameter of a binary (Bernoulli) random variable $y$, i.e. the probability $p = P(y = 1 \mid x)$.
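Written out as a Bernoulli model (notation assumed here: $\sigma$ is the Sigmoid Function, $w$ the weights, $b$ the intercept, $n$ the number of samples):

$$
P(y = 1 \mid x) = p = \sigma(w^\top x + b), \qquad P(y \mid x) = p^{y} (1 - p)^{1 - y}
$$

$$
-\log L(w, b) = -\sum_{i=1}^{n} \left[ y_i \log p_i + (1 - y_i) \log (1 - p_i) \right], \qquad p_i = \sigma(w^\top x_i + b)
$$

This negative Log-Likelihood is the log loss (binary cross-entropy) that the optimizer minimizes.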

We can interpret the output as a probability, which is really nice for Explainability purposes. For Binary Classification we can therefore use 0.5 as the probability threshold between the two classes.
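A short scikit-learn sketch on synthetic data from `make_classification` (chosen only for illustration): thresholding the predicted probability of class 1 at 0.5 reproduces the labels that `predict` returns for a binary problem.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

# Synthetic binary data, for illustration only.
X, y = make_classification(n_samples=200, n_features=4, random_state=0)

clf = LogisticRegression().fit(X, y)

proba = clf.predict_proba(X)[:, 1]     # P(y = 1 | x) for each sample
y_hat = (proba > 0.5).astype(int)      # threshold the probability at 0.5

# For a binary problem this matches scikit-learn's own predict().
print(np.array_equal(y_hat, clf.predict(X)))
```

Lowering or raising the threshold trades false positives against false negatives; 0.5 is only the natural default.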

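It is fitted with Gradient Descent (or a related iterative optimizer) by minimizing the negative Log-Likelihood, i.e. maximizing the Log-Likelihood. This loss is convex, so any minimum that is found is the global one.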

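The logistic update (one Gradient Descent step with learning rate $\eta$ on the negative Log-Likelihood, where $\sigma$ is the Sigmoid Function) is

$$
w \leftarrow w - \eta \sum_{i=1}^{n} \left( \sigma(w^\top x_i + b) - y_i \right) x_i, \qquad b \leftarrow b - \eta \sum_{i=1}^{n} \left( \sigma(w^\top x_i + b) - y_i \right)
$$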
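A minimal NumPy sketch of this update as plain batch gradient descent, on synthetic data drawn from a known logistic model. The function name `fit_logreg_gd`, the learning rate and the iteration count are hypothetical choices. It averages the gradient over the samples instead of summing it, which only rescales the learning rate.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fit_logreg_gd(X, y, lr=0.5, n_iter=10_000):
    """Batch gradient descent on the mean negative log-likelihood."""
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(n_iter):
        p = sigmoid(X @ w + b)        # current probabilities p_i
        err = p - y                   # (p_i - y_i)
        w -= lr * (X.T @ err) / n     # w <- w - eta * mean((p_i - y_i) * x_i)
        b -= lr * err.mean()          # b <- b - eta * mean(p_i - y_i)
    return w, b

# Synthetic, non-separable data drawn from a known logistic model.
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 2))
true_w = np.array([2.0, -1.0])
y = (rng.random(500) < sigmoid(X @ true_w)).astype(float)

w, b = fit_logreg_gd(X, y)
grad_w = X.T @ (sigmoid(X @ w + b) - y) / len(y)   # ~0 at the optimum
print(w, b, np.linalg.norm(grad_w))
```

At the optimum the gradient is numerically zero and the weights should roughly recover the true `[2, -1]`. scikit-learn uses the `lbfgs` solver by default rather than plain gradient descent, but it minimizes the same loss plus its regularisation term.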

scikit-learn

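You can use the argument multi_class="multinomial" to fit a multinomial (softmax) model for multiclass Classification. In recent scikit-learn versions this argument is deprecated (since 1.5), and the multinomial model is already the default for more than two classes with any solver except liblinear.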
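A sketch on the Iris dataset (3 classes), assuming a recent scikit-learn version where the default `lbfgs` solver already fits a multinomial model, so `multi_class` is left out. It checks that the predicted probabilities are the softmax of the per-class linear scores.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)          # 150 samples, 4 features, 3 classes

# No multi_class argument: with the default lbfgs solver, recent scikit-learn
# versions fit a multinomial (softmax) model when there are more than 2 classes.
clf = LogisticRegression(max_iter=1000).fit(X, y)

scores = clf.decision_function(X[:5])      # one linear score per class
proba = clf.predict_proba(X[:5])           # class probabilities, rows sum to 1

# Multinomial model: probabilities are the softmax of the per-class scores.
softmax = np.exp(scores) / np.exp(scores).sum(axis=1, keepdims=True)
print(clf.coef_.shape)                     # (n_classes, n_features)
print(np.allclose(proba, softmax))
```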

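You can use the argument C, which is inversely proportional to the Regularisation strength (C = 1/λ, so a smaller C means stronger regularisation). With the default L2 penalty this shrinks the weights towards zero and penalizes large weights, which helps against Overfitting.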
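A quick way to see the shrinkage on synthetic data (the dataset and the values of `C` are arbitrary choices for illustration): as `C` grows, the regularisation gets weaker and the norm of the fitted weights grows.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

X, y = make_classification(n_samples=300, n_features=5, random_state=0)

norms = {}
for C in (0.01, 1.0, 100.0):
    # penalty="l2" is the default; smaller C = stronger penalty on large weights
    clf = LogisticRegression(C=C, max_iter=1000).fit(X, y)
    norms[C] = np.linalg.norm(clf.coef_)
    print(C, norms[C])
```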

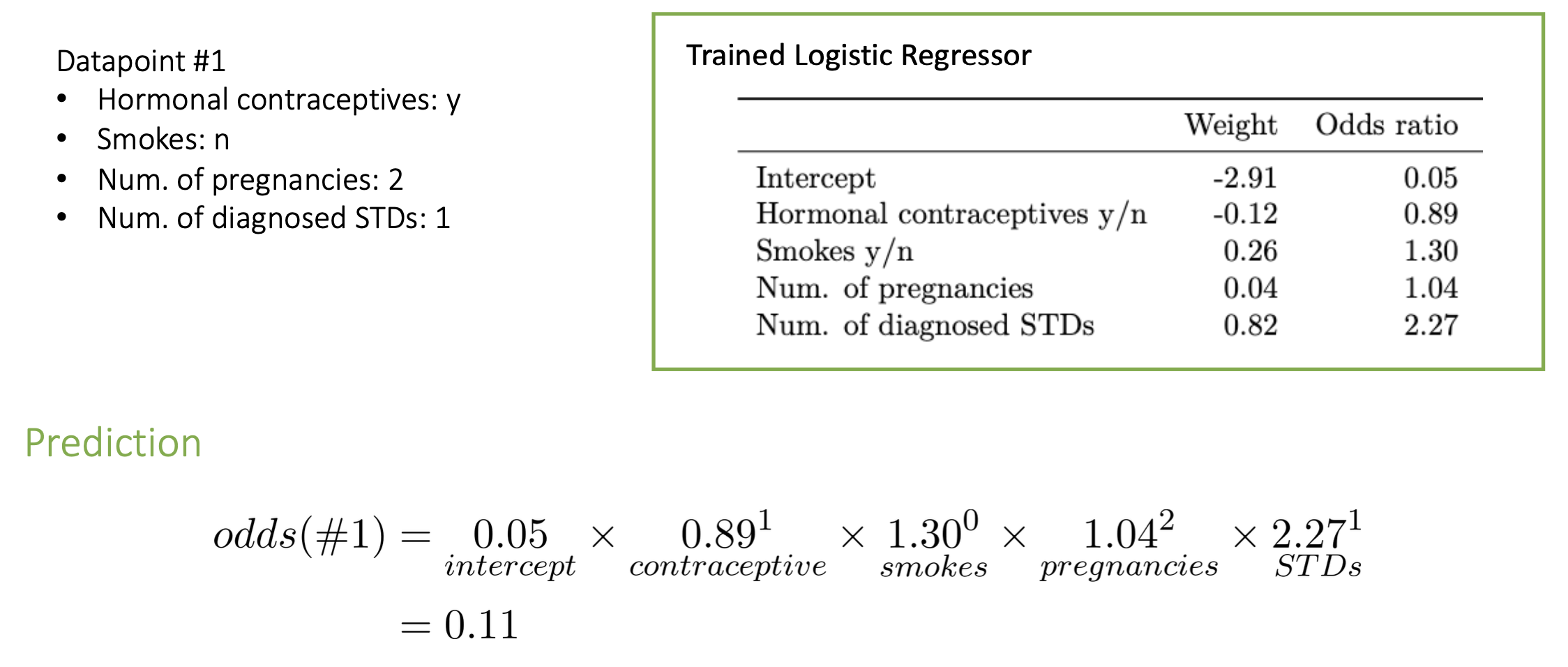

Explanations from Logistic Regression

See Odds Ratio for how to explain the outcome in terms of increases in the features.

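- Numerical
  - increase by one unit (all other features fixed) → changes the odds multiplicatively by exp(weight)
- Binary
  - same: switching from the reference value 0 to 1 → changes the odds by the factor exp(weight)
- Categorical Data
  - One-Hot-Encoding → same, with each category compared to the reference category (the category whose column is dropped)
- Intercept
  - exp(intercept) is the odds when all numerical features are 0 and all categorical features are at their reference category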
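A sketch that checks the Numerical and Intercept rules numerically on synthetic data (the helper `odds` is hypothetical, written only for this example): raising one feature by one unit multiplies the predicted odds by exp(weight), and at x = 0 the odds equal exp(intercept).

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression

X, y = make_classification(n_samples=300, n_features=3, n_informative=3,
                           n_redundant=0, random_state=1)
clf = LogisticRegression().fit(X, y)

def odds(x):
    """Odds p / (1 - p) of class 1 for a single sample x."""
    p = clf.predict_proba(x.reshape(1, -1))[0, 1]
    return p / (1.0 - p)

x0 = np.zeros(3)       # all features at 0
x1 = x0.copy()
x1[0] += 1.0           # feature 0 increased by one unit, others fixed

ratio = odds(x1) / odds(x0)
print(ratio, np.exp(clf.coef_[0, 0]))         # odds ratio vs exp(weight)
print(odds(x0), np.exp(clf.intercept_[0]))    # odds at x = 0 vs exp(intercept)
```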

Problem of complete separation

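If a feature (or a combination of features) perfectly separates the classes, logistic regression will not converge: the Log-Likelihood keeps improving as the weights grow, so the optimum would need infinite weights.

→ Use Regularisation, which keeps the weights finite → Or just use a rule-based approach instead of ML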
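A toy NumPy illustration with six hypothetical points that a single feature separates perfectly (no intercept and plain gradient descent, both simplifications for brevity; `gd_weight` and `lam` are names made up for this example). Without a penalty the weight keeps growing the longer we optimize, while an L2 penalty makes it settle at a finite value.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# One feature that perfectly separates the classes: x < 0 -> 0, x > 0 -> 1.
x = np.array([-3.0, -2.0, -1.0, 1.0, 2.0, 3.0])
y = np.array([0.0, 0.0, 0.0, 1.0, 1.0, 1.0])

def gd_weight(n_iter, lam=0.0, lr=0.5):
    """Gradient descent on mean NLL + (lam / 2) * w**2, single weight, no intercept."""
    w = 0.0
    for _ in range(n_iter):
        p = sigmoid(w * x)
        grad = np.mean((p - y) * x) + lam * w
        w -= lr * grad
    return w

for n_iter in (100, 1_000, 10_000):
    # unpenalized weight keeps increasing; penalized weight stays put
    print(n_iter, gd_weight(n_iter), gd_weight(n_iter, lam=0.1))
```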

Calculating Odds by Hand