Gradient Descent

Finding a local minimum in a “mountain like” environment.

Gradient descent benefits from Standardization as the weight updates are fair between different features.

Concrete Algorithms

Approach:

- Start with random weights

- Calculate Direction of Steepest Descend (using Gradient)

- Step in that direction (learning rate)

- Repeat from step 2

![[CleanShot 2023-10-04 at 12.06.50@2x.png]]

Algorithm:

- Calculate loss

- Calculate the Gradient of the loss

- Subtract - times the Gradient of the loss ( = learning rate)

- Start at step 1

Cons

- multiple Local Minima will prevent you from finding the globales Minimum (there is no guarantee to finding an optimal solution)

- depends on random initialization

- depends on learning rate:

- high values → jumping over the minimum

- low values → never reach the minimum

NOTE

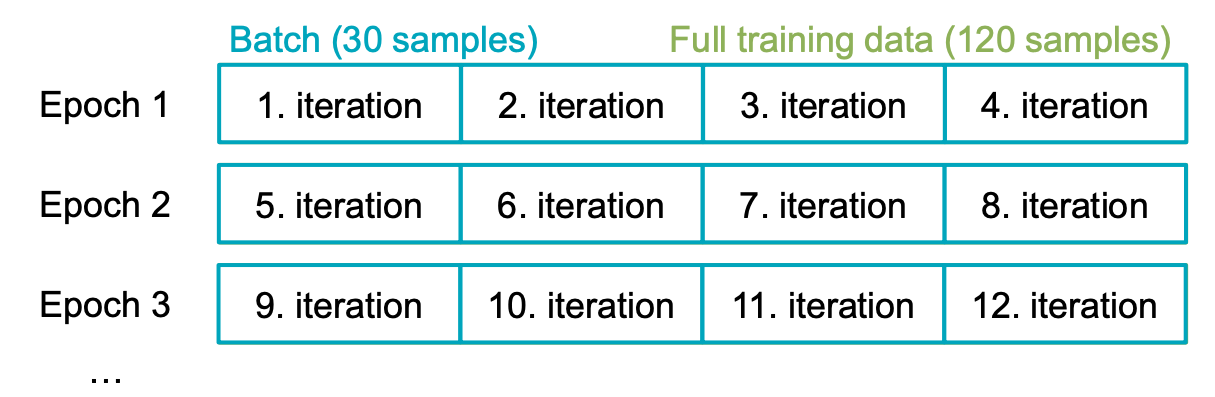

- For each epoch (= one loop over the training data)

- For each batch (= one small portion of the training data)

- Compute the error

- Compute the gradients

- Update the parameters using the gradients

Example

For the Mean Squared Error Loss Function we can derive gradient descent updates.

![[CleanShot 2023-10-04 at 12.09.12@2x.png]]

And for multiple examples we get

![[CleanShot 2023-10-04 at 12.10.19@2x.png]]

batch gradient descent learning rule for univariate linear regression.

The Concept of Batches Instead of computing the sum of losses on one complete epoch, which can be very expensive, we divide each epoch into multiple smaller pieces → batches. Usually the size of these batches is a fixed number.